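K3s Deployment Process

Introduction

A K3s cluster consists of K3s Servers (the control plane) and K3s Agents (the worker nodes). All components are packaged into a single binary.

Environment

Adjust the IP addresses to match your own environment.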

Steps

# Disable the firewall

systemctl disable firewalld --now

# Install the SELinux policy packages (requires Internet access)

yum install -y container-selinux selinux-policy-base

yum install -y https://rpm.rancher.io/k3s/latest/common/centos/7/noarch/k3s-selinux-0.2-1.el7_8.noarch.rpm

Perform the following steps on every host:

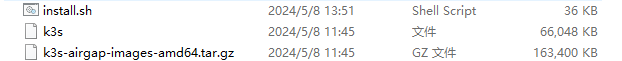

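Upload the files

# Go to the working directory

cd /home

# Install the upload tool (lrzsz provides the rz command)

yum -y install lrzsz

# Upload the three files

rz

Upload these three files to /home (link: https://pan.baidu.com/s/18xbh5Ofvg8-TqimXKZcLMQ, extraction code: te58)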

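Preparation

The install.sh script

- Download the script, or paste its contents into install.sh

- Convert it to Unix line endings (needed if the file passed through Windows)

yum install dos2unix -y

dos2unix ./install.sh

Move the files into place

mv k3s /usr/local/bin

chmod +x /usr/local/bin/k3s

mkdir -p /var/lib/rancher/k3s/agent/images/

cp ./k3s-airgap-images-amd64.tar.gz /var/lib/rancher/k3s/agent/images/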

mkdir -p /var/lib/rancher/k3s/agent/images/

cp ./k3s-airgap-images-amd64.tar.gz /var/lib/rancher/k3s/agent/images/

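k8s-master (192.168.31.204)

# Make the script executable

chmod +x install.sh

# Offline (air-gapped) installation

INSTALL_K3S_SKIP_DOWNLOAD=true ./install.sh

# After installation, check the node status

kubectl get node

# Show the token the agents need to join the cluster

cat /var/lib/rancher/k3s/server/node-token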

cat /var/lib/rancher/k3s/server/node-token

k8s-work1 (192.168.31.180) and k8s-work2 (192.168.31.146)

# K3S_URL points to the master; K3S_TOKEN is the node-token value shown on the master
INSTALL_K3S_SKIP_DOWNLOAD=true \
K3S_URL=https://192.168.31.204:6443 \
K3S_TOKEN=K10e6745f7fb6e204a4e2a8c5a12df4b3b2b3ff4b52034421c3ed1a168c3ef4be56::server:23e98714cdfd960face1a4064da62f7a \
./install.sh

k8s-master

After installation, watch the nodes join:

watch -n 1 kubectl get node
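Instead of watching the node list by hand, a script can poll until a node registers and becomes Ready. The sketch below is a generic retry helper. The function name `retry` and its argument order are assumptions made for illustration and are not part of K3s or kubectl.

```shell
#!/usr/bin/env bash
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it exits with status 0, sleeping DELAY_SECONDS between tries.
# Returns 0 on the first success, or 1 if every attempt failed.
retry() {
  local attempts=$1 delay=$2
  shift 2
  local i
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    # Do not sleep after the final failed attempt
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `retry 60 5 kubectl wait --for=condition=Ready node/k8s-work1 --timeout=5s` keeps trying for roughly five minutes. The retry loop matters here because `kubectl wait` typically fails right away while the node object does not exist yet.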

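# If an agent fails to join (for example, because the hosts still share the default hostname localhost.localdomain), give each host a unique hostname

vim /etc/hostname

# Delete the stale node registered under the default hostname

kubectl delete node localhost.localdomain

Inspect the containerd configuration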

ps -ef | grep containerd

cat /var/lib/rancher/k3s/agent/etc/containerd/config.toml

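Create the file /etc/rancher/k3s/registries.yaml on every node with the following content:

mirrors:
  docker.io:
    endpoint:
      - "https://fsp2sfpr.mirror.aliyuncs.com/"

Configure the registry mirror

cd /etc/rancher/k3s/

# Create the file and add the mirror configuration

vim /etc/rancher/k3s/registries.yaml

# Restart K3s on k8s-master

systemctl restart k3s

# Restart the agent on k8s-work1 and k8s-work2

systemctl restart k3s-agent

# Check that the mirror now appears in the generated containerd configuration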

cat /var/lib/rancher/k3s/agent/etc/containerd/config.toml
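If several nodes need the same file, it can be written non-interactively instead of with vim. This is a minimal sketch. The function name and its two optional parameters (config directory and mirror URL) are hypothetical, and the defaults are the path and mirror used above.

```shell
#!/usr/bin/env bash
# write_registries_yaml [CONFIG_DIR] [MIRROR_URL]
# Writes a registries.yaml that sends docker.io pulls to MIRROR_URL.
# Defaults match this article; pass another CONFIG_DIR to try it safely elsewhere.
write_registries_yaml() {
  local config_dir=${1:-/etc/rancher/k3s}
  local mirror=${2:-https://fsp2sfpr.mirror.aliyuncs.com/}
  mkdir -p "$config_dir" || return 1
  # Unquoted EOF so that $mirror is expanded inside the heredoc
  cat > "$config_dir/registries.yaml" <<EOF
mirrors:
  docker.io:
    endpoint:
      - "$mirror"
EOF
}
```

Run `write_registries_yaml` as root on every node, then restart `k3s` or `k3s-agent` as described above.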

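Creating and managing Pods

kubectl run mynginx --image=nginx

# List Pods

kubectl get pod

# Show Pod details

kubectl describe pod mynginx

# View the Pod's logs

kubectl logs mynginx

# Show the Pod's IP and the node it runs on

kubectl get pod -owide

# Access the container at the Pod IP plus the container port (port 80 can be omitted)

curl 10.42.4.5

# Open a shell inside the container

kubectl exec mynginx -it -- /bin/bash

# Watch Pod status changes

kubectl get po --watch

# -it: interactive mode with a terminal

# --rm: delete the Pod on exit; useful for one-off tasks or for running a client

kubectl run nginx-tmp --image=nginx -it --rm -- /bin/bash

# Delete

kubectl delete pod mynginx

# Force delete

kubectl delete pod mynginx --force

# List Pods, then force-delete one immediately, skipping the grace period

kubectl get pod

kubectl delete pod label-demo -n default --force --grace-period=0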

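Deployments and ReplicaSets

A **Deployment** is a higher-level abstraction over ReplicaSets and Pods.

It gives Pods multiple replicas, self-healing, scaling, and rolling updates.

A ReplicaSet is a set of Pods.

It sets the number of Pods to run and ensures that the specified number of Pod replicas is running at all times.

We normally do not use ReplicaSets directly. Instead, we declare them through a Deployment.

# Create a Deployment that runs 3 nginx Pods

kubectl create deployment nginx-deploy --image=nginx:1.22 --replicas=3

# List Deployments

kubectl get deploy

# List ReplicaSets

kubectl get rs

# Delete the Deployment

kubectl delete deploy nginx-deploy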

kubectl delete deploy nginx-deploy

Self-healing

--replicas=3 sets the number of Pods in the ReplicaSet to 3.

[root@k8s-master k3s]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deploy-855866bb46 3 3 3 16s

[root@k8s-master k3s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-855866bb46-h8ppk 1/1 Running 0 71s

nginx-deploy-855866bb46-7xxtb 1/1 Running 0 71s

nginx-deploy-855866bb46-cfc9c 1/1 Running 0 71s

When you delete one of the Pods, a replacement is created automatically:

[root@k8s-master k3s]# kubectl delete pod nginx-deploy-855866bb46-cfc9c

pod "nginx-deploy-855866bb46-cfc9c" deleted

[root@k8s-master k3s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-855866bb46-h8ppk 1/1 Running 0 4m16s

nginx-deploy-855866bb46-7xxtb 1/1 Running 0 4m16s

nginx-deploy-855866bb46-vk7lr 1/1 Running 0 9s

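Scaling out (watch the ReplicaSet in one terminal while running the kubectl scale command below in another)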

[root@k8s-master ~]# kubectl get replicaSet --watch

NAME DESIRED CURRENT READY AGE

nginx-deploy-855866bb46 3 3 3 9m41s

nginx-deploy-855866bb46 5 3 3 10m

nginx-deploy-855866bb46 5 3 3 10m

nginx-deploy-855866bb46 5 5 3 10m

nginx-deploy-855866bb46 5 5 4 10m

nginx-deploy-855866bb46 5 5 5 10m

# Change the number of replicas to 5

kubectl scale deploy nginx-deploy --replicas=5

kubectl get deploy

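Autoscaling

A HorizontalPodAutoscaler increases or decreases the number of replicas to keep the average CPU utilization of all Pods at the 75% target.

Autoscaling requires CPU resource requests to be declared for the Pods, because utilization is measured against the request. It also relies on the Metrics Server, which K3s installs by default.

# Create the autoscaler

kubectl autoscale deployment/nginx-deploy --min=3 --max=10 --cpu-percent=75

# View autoscalers

kubectl get hpa

# Delete the autoscaler

kubectl delete hpa nginx-deploy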

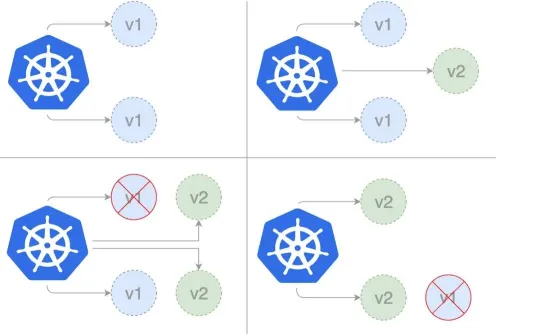
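The scaling decision follows the formula documented for the HorizontalPodAutoscaler: desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), clamped to the --min/--max bounds. The sketch below reproduces only that arithmetic. It ignores the controller's tolerance band (by default, changes within about 10% of the target are skipped) and its stabilization windows. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# hpa_desired_replicas CURRENT CURRENT_UTIL_PCT TARGET_UTIL_PCT MIN MAX
# Prints ceil(CURRENT * CURRENT_UTIL_PCT / TARGET_UTIL_PCT), clamped to [MIN, MAX].
hpa_desired_replicas() {
  local current=$1 util=$2 target=$3 min=$4 max=$5
  # Integer ceiling division: ceil(a / b) == (a + b - 1) / b for positive b
  local desired=$(( (current * util + target - 1) / target ))
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}
```

With the autoscaler above (--min=3 --max=10 --cpu-percent=75), suppose 3 Pods average 150% of their CPU request. Then `hpa_desired_replicas 3 150 75 3 10` prints 6. If the same 3 Pods drop to 20%, the result stays at the floor of 3.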

Rolling update

# Check the image version and Pods

[root@k8s-master ~]# kubectl get deploy -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-deploy 3/3 3 3 16m nginx nginx:1.22 app=nginx-deploy

# Update the container image

kubectl set image deploy/nginx-deploy nginx=nginx:1.23

# Watch the rollout

[root@k8s-master ~]# kubectl get rs --watch

NAME DESIRED CURRENT READY AGE

nginx-deploy-855866bb46 3 3 3 22m

nginx-deploy-7c9c6446b7 0 0 0 2m27s

nginx-deploy-7c9c6446b7 0 0 0 2m34s

nginx-deploy-7c9c6446b7 1 0 0 2m34s

nginx-deploy-7c9c6446b7 1 0 0 2m34s

nginx-deploy-7c9c6446b7 1 1 0 2m34s

nginx-deploy-7c9c6446b7 1 1 1 2m36s

nginx-deploy-855866bb46 2 3 3 23m

nginx-deploy-7c9c6446b7 2 1 1 2m36s

nginx-deploy-7c9c6446b7 2 1 1 2m36s

nginx-deploy-855866bb46 2 3 3 23m

nginx-deploy-855866bb46 2 2 2 23m

nginx-deploy-7c9c6446b7 2 2 1 2m36s

nginx-deploy-7c9c6446b7 2 2 2 2m38s

nginx-deploy-855866bb46 1 2 2 23m

nginx-deploy-855866bb46 1 2 2 23m

nginx-deploy-7c9c6446b7 3 2 2 2m38s

nginx-deploy-855866bb46 1 1 1 23m

nginx-deploy-7c9c6446b7 3 2 2 2m38s

nginx-deploy-7c9c6446b7 3 3 2 2m38s

nginx-deploy-7c9c6446b7 3 3 3 2m39s

nginx-deploy-855866bb46 0 1 1 23m

nginx-deploy-855866bb46 0 1 1 23m

nginx-deploy-855866bb46 0 0 0 23m

[root@k8s-master ~]# kubectl get deploy -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-deploy 3/3 3 3 26m nginx nginx:1.23 app=nginx-deploy
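In the watch output above, the new ReplicaSet gains one Pod at a time, and the old ReplicaSet keeps all 3 Pods until a new Pod is ready. This matches the Deployment's default RollingUpdate settings, maxSurge=25% and maxUnavailable=25%. The surge percentage is rounded up and the unavailable percentage is rounded down. A small sketch of that arithmetic follows; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# rolling_update_budget REPLICAS [MAX_SURGE_PCT] [MAX_UNAVAILABLE_PCT]
# Prints how many extra Pods a rolling update may create and how many may be unavailable.
rolling_update_budget() {
  local replicas=$1 surge_pct=${2:-25} unavailable_pct=${3:-25}
  # maxSurge percentages are rounded up, maxUnavailable percentages rounded down
  local max_surge=$(( (replicas * surge_pct + 99) / 100 ))
  local max_unavailable=$(( replicas * unavailable_pct / 100 ))
  # If both round to 0 the rollout could never progress, so the controller uses maxUnavailable=1
  if (( max_surge == 0 && max_unavailable == 0 )); then
    max_unavailable=1
  fi
  echo "maxSurge=$max_surge maxUnavailable=$max_unavailable" \
       "maxPods=$(( replicas + max_surge )) minAvailable=$(( replicas - max_unavailable ))"
}
```

For the 3-replica nginx-deploy, this prints `maxSurge=1 maxUnavailable=0 maxPods=4 minAvailable=3`. So at most 4 Pods exist during the update, and at least 3 stay available.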

Rollback

# View the revision history

[root@k8s-master k3s]# kubectl rollout history deploy/nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

3 <none>

4 <none>

[root@k8s-master ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deploy-7c9c6446b7 3 3 3 13m

nginx-deploy-855866bb46 0 0 0 33m

# Show the details of a specific revision

[root@k8s-master k3s]# kubectl rollout history deploy/nginx-deploy --revision=3

deployment.apps/nginx-deploy with revision #3

Pod Template:

Labels: app=nginx-deploy

pod-template-hash=855866bb46

Containers:

nginx:

Image: nginx:1.22

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

# Roll back to a previous revision

[root@k8s-master k3s]# kubectl rollout undo deploy/nginx-deploy --to-revision=3

deployment.apps/nginx-deploy rolled back

[root@k8s-master ~]# kubectl get rs --watch

NAME DESIRED CURRENT READY AGE

nginx-deploy-7c9c6446b7 3 3 3 14m

nginx-deploy-855866bb46 0 0 0 34m

nginx-deploy-855866bb46 0 0 0 35m

nginx-deploy-855866bb46 1 0 0 35m

nginx-deploy-855866bb46 1 0 0 35m

nginx-deploy-855866bb46 1 1 0 35m

nginx-deploy-855866bb46 1 1 1 35m

nginx-deploy-7c9c6446b7 2 3 3 15m

nginx-deploy-855866bb46 2 1 1 35m

nginx-deploy-7c9c6446b7 2 3 3 15m

nginx-deploy-855866bb46 2 1 1 35m

nginx-deploy-7c9c6446b7 2 2 2 15m

nginx-deploy-855866bb46 2 2 1 35m

nginx-deploy-855866bb46 2 2 2 35m

nginx-deploy-7c9c6446b7 1 2 2 15m

nginx-deploy-855866bb46 3 2 2 35m

nginx-deploy-7c9c6446b7 1 2 2 15m

nginx-deploy-7c9c6446b7 1 1 1 15m

nginx-deploy-855866bb46 3 2 2 35m

nginx-deploy-855866bb46 3 3 2 35m

nginx-deploy-855866bb46 3 3 3 35m

nginx-deploy-7c9c6446b7 0 1 1 15m

nginx-deploy-7c9c6446b7 0 1 1 15m

nginx-deploy-7c9c6446b7 0 0 0 15m

[root@k8s-master k3s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-855866bb46-4h5jn 1/1 Running 0 2m28s

nginx-deploy-855866bb46-kfq2x 1/1 Running 0 2m27s

nginx-deploy-855866bb46-9xf98 1/1 Running 0 2m26s

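Services

A Service is an abstraction that exposes an application running on a set of Pods as a network service.

A Service gives a set of Pods a single DNS name and load-balances traffic across them.

Kubernetes assigns each Pod an IP address, but Pod IPs can change (for example, when a Pod is recreated).

Containers in the cluster can reach the application through the Service name without worrying about Pod IP changes.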

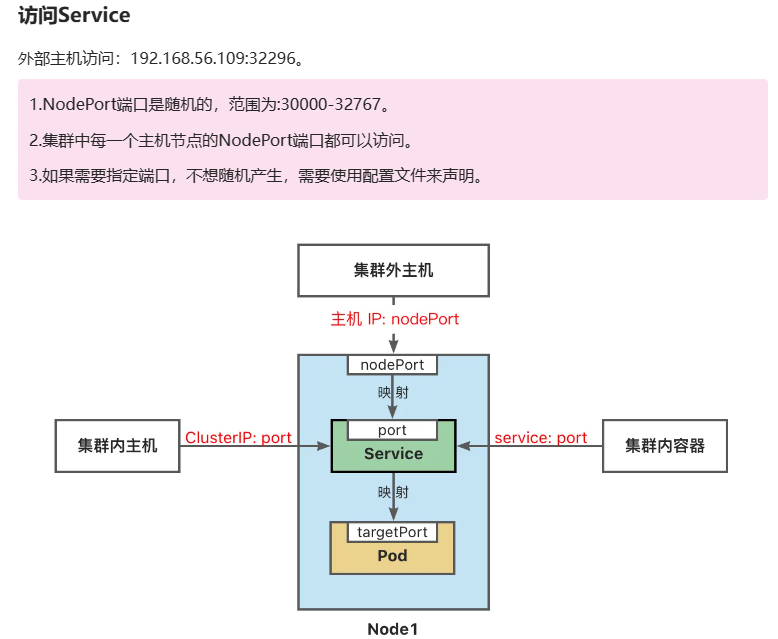

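Type values

- ClusterIP: exposes the Service inside the cluster. Kubernetes assigns the Service a cluster-internal IP, and every host in the cluster can reach the Service through this Cluster-IP. Pods in the cluster can also reach the Service by its name.
- NodePort: exposes the Service on each node's host IP at a static port (the NodePort). Hosts outside the cluster can reach the Service at a node IP plus the NodePort.
- ExternalName: maps the Service to a DNS name outside the cluster, bringing an external service into the cluster.
- LoadBalancer: exposes the Service externally through a cloud provider's load balancer.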

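# --port is the port the Service listens on; --target-port is the Pod (container) port

# The two are usually the same

kubectl expose deploy/nginx-deploy \
  --name=nginx-service --type=ClusterIP --port=80 --target-port=80

# NodePort: a node (host) port is assigned at random, from 30000-32767 by default

kubectl expose deploy/nginx-deploy \
  --name=nginx-outside --type=NodePort --port=8081 --target-port=80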

Inspect the resources

[root@k8s-master k3s]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 3/3 3 3 48m

[root@k8s-master k3s]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-deploy-855866bb46-4h5jn 1/1 Running 0 13m

pod/nginx-deploy-855866bb46-kfq2x 1/1 Running 0 13m

pod/nginx-deploy-855866bb46-9xf98 1/1 Running 0 13m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 5d

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deploy 3/3 3 3 48m

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deploy-855866bb46 3 3 3 48m

replicaset.apps/nginx-deploy-7c9c6446b7 0 0 0 28m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/nginx-deploy Deployment/nginx-deploy <unknown>/75% 3 10 3 3h41m

# --port is the Service port; --target-port is the Pod port

[root@k8s-master k3s]# kubectl expose deploy/nginx-deploy --name=nginx-service --port=8080 --target-port=80

service/nginx-service exposed

[root@k8s-master k3s]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 5d

nginx-service ClusterIP 10.43.177.31 <none> 8080/TCP 2m40s

Access from within the cluster (from a node)

[root@k8s-master k3s]# curl 10.43.177.31:8080

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Access from inside a container, by Service name

[root@k8s-master k3s]# kubectl run test -it --image=nginx:1.22 --rm -- bash

If you don't see a command prompt, try pressing enter.

root@test:/# curl nginx-service:8080

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k8s-master ~]# kubectl describe service nginx-service

Name: nginx-service

Namespace: default

Labels: app=nginx-deploy

Annotations: <none>

Selector: app=nginx-deploy

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.43.177.31

IPs: 10.43.177.31

Port: <unset> 8080/TCP

TargetPort: 80/TCP

Endpoints: 10.42.1.12:80,10.42.2.16:80,10.42.4.13:80

Session Affinity: None

Events: <none>

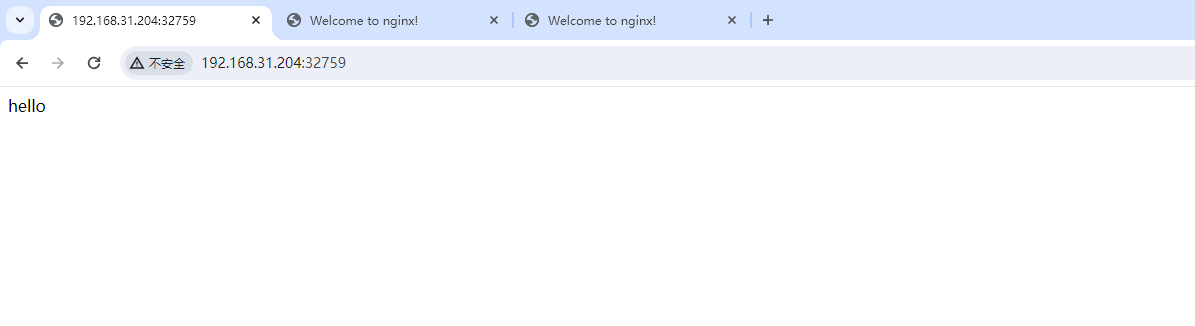

Exec into one of the Pods and modify its index page

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-855866bb46-4h5jn 1/1 Running 0 43m

nginx-deploy-855866bb46-kfq2x 1/1 Running 0 43m

nginx-deploy-855866bb46-9xf98 1/1 Running 0 43m

test 1/1 Running 0 19m

[root@k8s-master ~]# kubectl exec -it nginx-deploy-855866bb46-9xf98 -- bash

root@nginx-deploy-855866bb46-9xf98:/# cd /usr/share/nginx/html/

root@nginx-deploy-855866bb46-9xf98:/usr/share/nginx/html# ls

50x.html index.html

root@nginx-deploy-855866bb46-9xf98:/usr/share/nginx/html# echo hello > index.html

root@nginx-deploy-855866bb46-9xf98:/usr/share/nginx/html# exit

exit

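The Service load-balances requests across the backend Pods: one request returns the modified page, and the next returns the default page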

[root@k8s-master ~]# curl 10.43.177.31:8080

hello

[root@k8s-master ~]# curl 10.43.177.31:8080

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

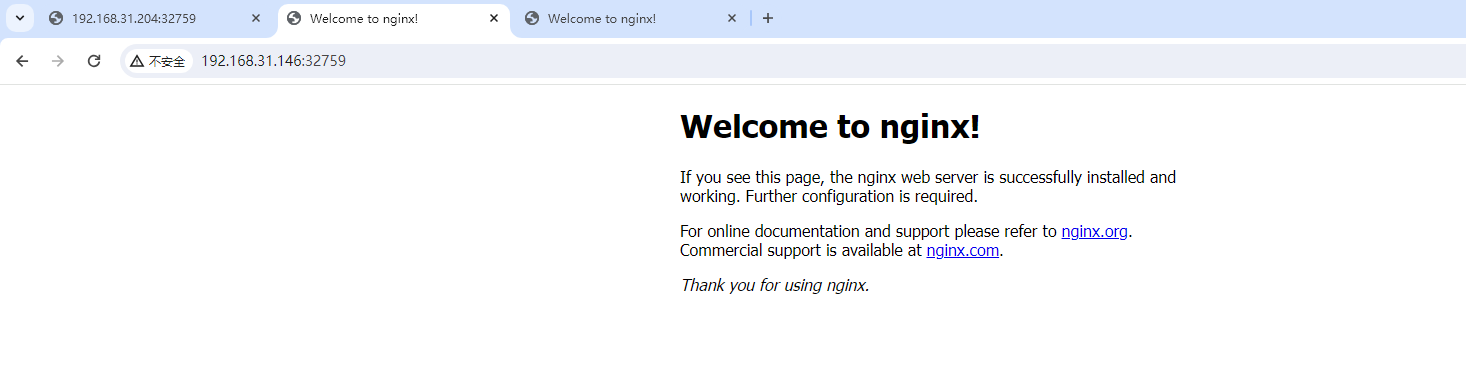

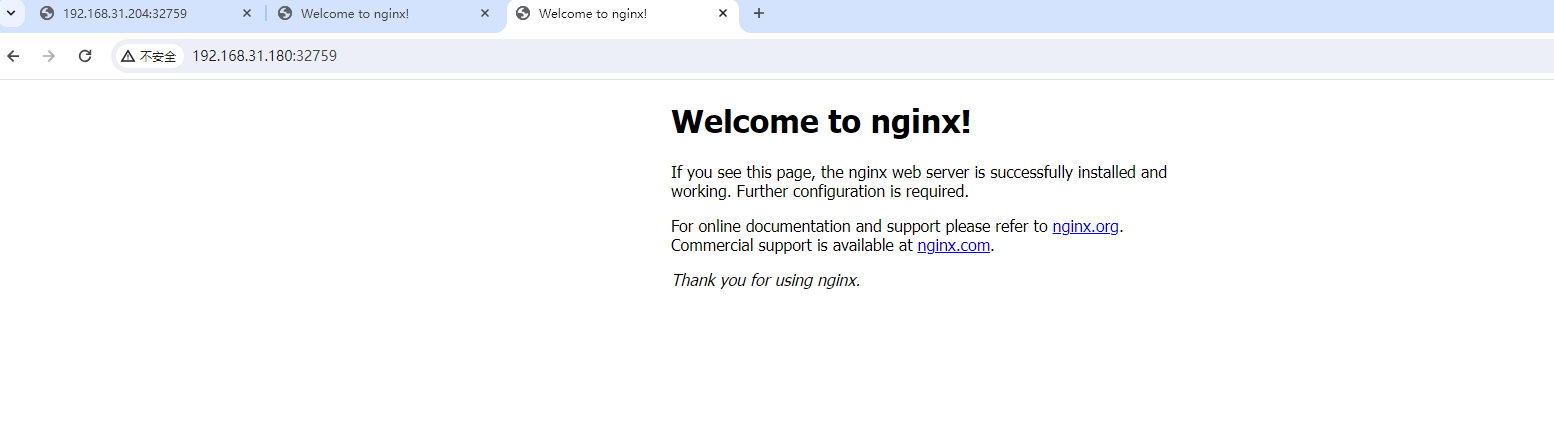
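Rather than running curl repeatedly by hand, a short loop can count which page each request returns. This is a sketch: `sample_responses` is a hypothetical helper. The split it reports is only approximate, because kube-proxy in its default iptables mode picks a backend Pod at random for each new connection rather than rotating strictly.

```shell
#!/usr/bin/env bash
# sample_responses COUNT COMMAND [ARGS...]
# Runs COMMAND COUNT times and prints how often each distinct first line of output occurred.
sample_responses() {
  local count=$1
  shift
  local i
  for (( i = 0; i < count; i++ )); do
    "$@" 2>/dev/null | head -n 1
  done | sort | uniq -c | sort -rn
}
```

For example, `sample_responses 20 curl -s 10.43.177.31:8080` should report some `hello` lines (from the one modified Pod) and more `<!DOCTYPE html>` lines (from the other two).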

Access from outside the cluster

k8s-master

k8s-work1

k8s-work2

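Namespaces

A **Namespace** is a resource isolation mechanism that divides the resources in a cluster into mutually isolated groups.

Namespaces can divide cluster resources among multiple users (via resource quotas).

- For example, we can create separate namespaces for development, testing, and production.

Resource names must be unique within a namespace, but not across namespaces.

Initial namespaces

Kubernetes creates four initial namespaces:

- **default**: the default namespace. It cannot be deleted, and objects created without a namespace are placed in it.
- **kube-system**: the namespace used by Kubernetes system objects (control plane and node components).
- **kube-public**: an automatically created public namespace that all users, including unauthenticated ones, can read. By convention, resources that should be visible and readable across the whole cluster go here.
- **kube-node-lease**: the namespace for Lease objects. Each node has an associated Lease, a lightweight resource. Nodes send heartbeats by renewing their Lease, which lets the cluster detect whether any node has failed.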

Using namespaces


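You don't need multiple namespaces to separate slightly different resources, such as different versions of the same software. Use labels to distinguish resources within the same namespace instead.

Namespaces are intended for scenarios that span multiple teams or projects.

Clusters with only a few to a few dozen users may not need any extra namespaces.

Namespaces cannot be nested, and each namespaced Kubernetes resource can belong to only one namespace.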

[root@k8s-master ~]# kubectl get namespace

NAME STATUS AGE

kube-system Active 5d2h

default Active 5d2h

kube-public Active 5d2h

kube-node-lease Active 5d2h

[root@k8s-master ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system local-path-provisioner-5b5579c644-fkld2 1/1 Running 1 (7h4m ago) 5d2h

kube-system svclb-traefik-d5e6bea7-n2h4m 2/2 Running 2 (7h4m ago) 5d2h

kube-system traefik-7d647b7597-jkjht 1/1 Running 1 (7h4m ago) 5d2h

default nginx-deploy-855866bb46-9xf98 1/1 Running 0 160m

kube-system svclb-traefik-d5e6bea7-j9pfd 2/2 Running 2 (7h4m ago) 5d2h

kube-system svclb-traefik-d5e6bea7-pkvcq 2/2 Running 3 (7h3m ago) 5d2h

kube-system metrics-server-74474969b-qhr2l 1/1 Terminating 1 (7h4m ago) 5d2h

default nginx-deploy-855866bb46-kfq2x 1/1 Terminating 0 160m

kube-system coredns-75fc8f8fff-bmtfn 1/1 Terminating 1 (7h4m ago) 5d2h

kube-system coredns-75fc8f8fff-56mdr 0/1 Running 0 30m

default nginx-deploy-855866bb46-zzqwb 1/1 Running 0 30m

default nginx-deploy-855866bb46-4h5jn 1/1 Terminating 0 160m

default nginx-deploy-855866bb46-sv44f 1/1 Running 0 30m

kube-system metrics-server-74474969b-qbrg7 0/1 CrashLoopBackOff 9 (4m34s ago) 30m

[root@k8s-master ~]# kubectl get lease -A

NAMESPACE NAME HOLDER AGE

kube-node-lease k8s-work1 k8s-work1 5d2h

kube-node-lease k8s-work2 k8s-work2 5d2h

kube-node-lease k8s-master k8s-master 5d2h

# Create a namespace

kubectl create namespace dev

[root@k8s-master ~]# kubectl create ns develop

namespace/develop created

# List namespaces

kubectl get ns

# Run a Pod in a namespace

kubectl run nginx --image=nginx --namespace=dev

kubectl run my-nginx --image=nginx -n=dev

[root@k8s-master ~]# kubectl run nginx --image=nginx:1.22 -n=develop

pod/nginx created

# List the Pods in a namespace

kubectl get pods -n=dev

[root@k8s-master ~]# kubectl get pod -n=develop

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 44s

# Without -n, the default namespace is used

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-855866bb46-9xf98 1/1 Running 0 177m

nginx-deploy-855866bb46-kfq2x 1/1 Terminating 0 177m

nginx-deploy-855866bb46-zzqwb 1/1 Running 0 48m

nginx-deploy-855866bb46-4h5jn 1/1 Terminating 0 177m

nginx-deploy-855866bb46-sv44f 1/1 Running 0 48m

# Show the current context

kubectl config current-context

# Change the default namespace of the current context

[root@k8s-master ~]# kubectl config set-context $(kubectl config current-context) --namespace=develop

Context "default" modified.

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 12m

# List all objects in the current namespace

kubectl get all

# Deleting a namespace deletes everything in it

kubectl delete ns dev

声明式配置

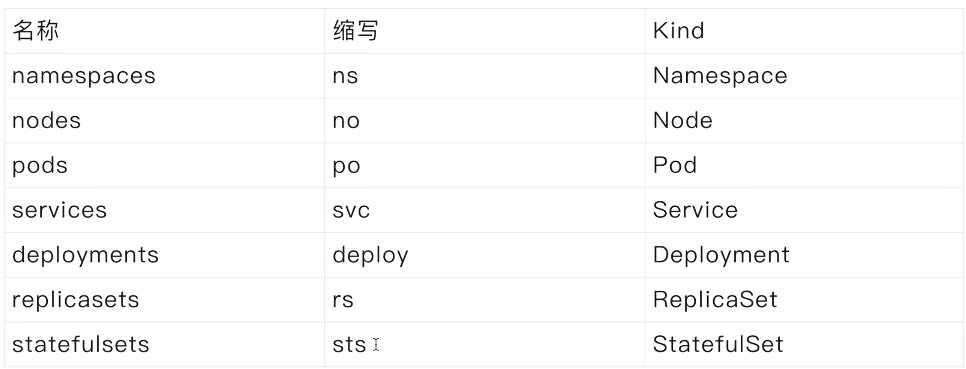

常用命令缩写

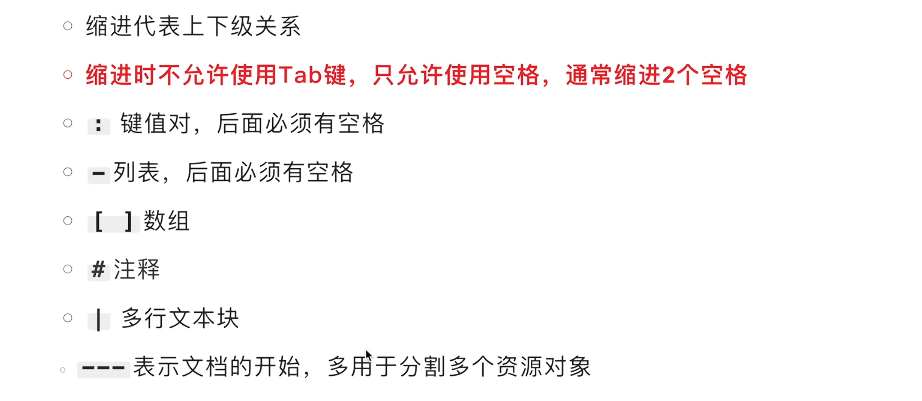

YAML规范

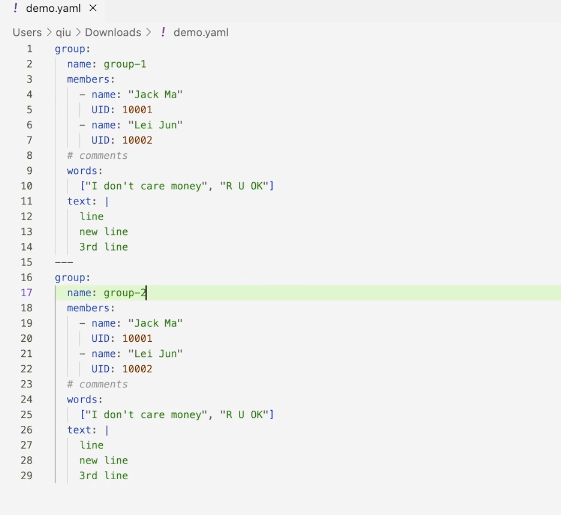

案例:

配置对象

参考文档:Pod | Kubernetes

[root@k8s-master home]# vim my-pod.yaml

[root@k8s-master home]# kubectl apply -f my-pod.yaml

pod/nginx created

[root@k8s-master home]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-855866bb46-9xf98 1/1 Running 0 18h

nginx-deploy-855866bb46-zzqwb 1/1 Running 0 16h

nginx-deploy-855866bb46-sv44f 1/1 Running 0 16h

nginx 1/1 Running 0 22s

[root@k8s-master home]# kubectl delete -f my-pod.yaml

pod "nginx" deleted

# Create the object

kubectl apply -f my-pod.yaml

# Edit the object

kubectl edit pod nginx

# Delete the object

kubectl delete -f my-pod.yaml

my-pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.22
      ports:
        - containerPort: 80

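Labels

Labels are key/value pairs attached to objects (such as Pods) that add descriptive information to them.

Labels let users map their own organizational structures onto objects in a loosely coupled way, without clients having to store these mappings.

Because a cluster may manage thousands of containers, labels let us select and operate on groups of containers efficiently.

Key format:

- prefix (optional)/name (required). If a prefix is present, it must be a DNS subdomain of at most 253 characters.

Valid names and values:

- Must be 63 characters or fewer (a value may be empty; a name may not)
- Unless empty, must begin and end with an alphanumeric character ([a-z0-9A-Z])
- May contain dashes (**-**), underscores (**_**), dots (**.**), and alphanumerics in between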

label-pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: label-demo
  labels: # define the Pod's labels
    environment: test
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.22
      ports:
        - containerPort: 80

[root@k8s-master home]# vim label-pod.yaml

[root@k8s-master home]# kubectl apply -f label-pod.yaml

pod/label-demo created

[root@k8s-master home]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-855866bb46-9xf98 1/1 Running 0 18h

nginx-deploy-855866bb46-kfq2x 1/1 Terminating 0 18h

nginx-deploy-855866bb46-zzqwb 1/1 Running 0 16h

nginx-deploy-855866bb46-4h5jn 1/1 Terminating 0 18h

nginx-deploy-855866bb46-sv44f 1/1 Running 0 16h

label-demo 1/1 Running 0 12s

[root@k8s-master home]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-deploy-855866bb46-9xf98 1/1 Running 0 18h app=nginx-deploy,pod-template-hash=855866bb46

nginx-deploy-855866bb46-kfq2x 1/1 Terminating 0 18h app=nginx-deploy,pod-template-hash=855866bb46

nginx-deploy-855866bb46-zzqwb 1/1 Running 0 16h app=nginx-deploy,pod-template-hash=855866bb46

nginx-deploy-855866bb46-4h5jn 1/1 Terminating 0 18h app=nginx-deploy,pod-template-hash=855866bb46

nginx-deploy-855866bb46-sv44f 1/1 Running 0 16h app=nginx-deploy,pod-template-hash=855866bb46

label-demo 1/1 Running 0 33s app=nginx,environment=test

[root@k8s-master home]# kubectl get pod -l "app=nginx"

NAME READY STATUS RESTARTS AGE

label-demo 1/1 Running 0 60s

[root@k8s-master home]# kubectl get pod -l "app=nginx,environment=test"

NAME READY STATUS RESTARTS AGE

label-demo 1/1 Running 0 108s
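You can check label keys and values against the naming rules listed earlier before applying a manifest. This is a minimal bash sketch with hypothetical function names. The value and name rules come from the list above. The prefix rule (a lowercase DNS subdomain of at most 253 characters) comes from the Kubernetes labels documentation. In a UTF-8 locale, `[:alnum:]` may also accept non-ASCII letters that Kubernetes would reject.

```shell
#!/usr/bin/env bash
# is_valid_label_value VALUE
# Empty is allowed; otherwise at most 63 characters, alphanumeric at both ends,
# with '-', '_', '.' or alphanumerics in between.
is_valid_label_value() {
  local value=$1
  local re='^[[:alnum:]]([-[:alnum:]_.]*[[:alnum:]])?$'
  [[ -z $value ]] && return 0
  (( ${#value} <= 63 )) || return 1
  [[ $value =~ $re ]]
}

# is_valid_label_key KEY
# KEY is "name" or "prefix/name". The name follows the value rules but may not be empty;
# the prefix must be a lowercase DNS subdomain of at most 253 characters.
is_valid_label_key() {
  local key=$1 prefix name
  local dns_re='^[[:lower:][:digit:]]([-[:lower:][:digit:]]*[[:lower:][:digit:]])?(\.[[:lower:][:digit:]]([-[:lower:][:digit:]]*[[:lower:][:digit:]])?)*$'
  if [[ $key == */* ]]; then
    prefix=${key%%/*}
    name=${key#*/}
    (( ${#prefix} <= 253 )) || return 1
    [[ $prefix =~ $dns_re ]] || return 1
  else
    name=$key
  fi
  [[ -n $name ]] || return 1
  is_valid_label_value "$name"
}
```

For example, `is_valid_label_key example.com/tier` succeeds, and `is_valid_label_value -bad` fails because the value starts with a dash.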

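Selectors

A label selector identifies a set of objects. Labels are not unique: many objects can carry the same label.

The most common use of a label selector is choosing the set of Pods that back a Service.

Service configuration template:

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  type: NodePort
  selector: # must match the Pod's labels
    environment: test
    app: nginx
  ports:
    # By default and for convenience, `targetPort` is set to the same value as the `port` field.
    - port: 80
      targetPort: 80
      # Optional field
      # By default and for convenience, the Kubernetes control plane allocates a port from a range (default: 30000-32767)
      nodePort: 30007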

Hands-on:

[root@k8s-master home]# vim my-service.yaml

[root@k8s-master home]# kubectl apply -f my-service.yaml

service/my-service created

[root@k8s-master home]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 5d18h

nginx-service ClusterIP 10.43.177.31 <none> 8080/TCP 18h

nginx-outside NodePort 10.43.184.94 <none> 8081:32759/TCP 17h

my-service NodePort 10.43.203.191 <none> 80:30007/TCP 58s

[root@k8s-master home]# kubectl describe svc/my-service

Name: my-service

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx,environment=test

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.43.203.191

IPs: 10.43.203.191

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 30007/TCP

Endpoints: 10.42.1.19:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

[root@k8s-master home]# kubectl get pod -l "app=nginx" -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

label-demo 1/1 Running 0 20m 10.42.1.19 k8s-master <none> <none>
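The Service template above is plain YAML, so it can also be generated from a few parameters and piped into `kubectl apply -f -`. The sketch below is a simplified, hypothetical helper. It selects Pods by a single `app` label, whereas the template above also matches `environment: test`. It rejects a nodePort outside the default 30000-32767 range mentioned in the template's comment.

```shell
#!/usr/bin/env bash
# render_nodeport_service NAME APP_LABEL PORT TARGET_PORT NODE_PORT
# Prints a NodePort Service manifest that selects Pods labeled app=APP_LABEL.
render_nodeport_service() {
  local name=$1 app=$2 port=$3 target_port=$4 node_port=$5
  # Reject ports outside the default NodePort range (30000-32767)
  if (( node_port < 30000 || node_port > 32767 )); then
    echo "nodePort $node_port is outside 30000-32767" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $name
spec:
  type: NodePort
  selector:
    app: $app
  ports:
    - port: $port
      targetPort: $target_port
      nodePort: $node_port
EOF
}
```

Usage, with a hypothetical Service name and port: `render_nodeport_service nginx-np nginx 80 80 30009 | kubectl apply -f -`.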

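Label selector operators

Two types of selector operations are currently supported: equality-based and set-based.

Multiple requirements are separated by commas and combined with a logical AND (&&).

Equality-based selection

selector:
  matchLabels: # component=redis && version=7.0
    component: redis
    version: "7.0" # quoted: label values must be strings, and an unquoted 7.0 is parsed as a number

Set-based selection

selector:
  matchExpressions: # tier in (cache, backend) && environment not in (dev, prod)
    - {key: tier, operator: In, values: [cache, backend]}
    - {key: environment, operator: NotIn, values: [dev, prod]}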
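To make the AND semantics concrete, the sketch below evaluates a list of requirements against an object's labels. It is a hypothetical bash helper (bash 4+ for associative arrays) that uses its own ad hoc syntax: `key=value`, `key!=value`, `key:In:v1,v2`, and `key:NotIn:v1,v2`. As in Kubernetes, `!=` and `NotIn` are also satisfied when the key is absent.

```shell
#!/usr/bin/env bash
# Requires bash 4+ (associative arrays).
# selector_matches "LABELS" REQUIREMENT...
#   LABELS: the object's labels as space-separated key=value pairs
#   REQUIREMENT: key=value | key!=value | key:In:v1,v2 | key:NotIn:v1,v2
# Returns 0 only if every requirement holds (logical AND).
selector_matches() {
  local -A labels=()
  local pair req key op list value in_list
  local -a allowed
  for pair in $1; do
    labels[${pair%%=*}]=${pair#*=}
  done
  shift
  for req in "$@"; do
    case $req in
      *:In:*|*:NotIn:*)
        key=${req%%:*}
        op=${req#*:}
        op=${op%%:*}
        list=${req##*:}
        in_list=false
        if [[ -n ${labels[$key]+x} ]]; then
          IFS=, read -r -a allowed <<< "$list"
          for value in "${allowed[@]}"; do
            if [[ ${labels[$key]} == "$value" ]]; then in_list=true; fi
          done
        fi
        # In needs a listed value; NotIn passes when the value is unlisted or the key is absent
        if [[ $op == In && $in_list == false ]]; then return 1; fi
        if [[ $op == NotIn && $in_list == true ]]; then return 1; fi
        ;;
      *!=*)
        key=${req%%!=*}
        # Also satisfied when the key is absent
        [[ ${labels[$key]-} != "${req#*!=}" ]] || return 1
        ;;
      *=*)
        key=${req%%=*}
        [[ -n ${labels[$key]+x} && ${labels[$key]} == "${req#*=}" ]] || return 1
        ;;
      *)
        echo "unsupported requirement: $req" >&2
        return 2
        ;;
    esac
  done
  return 0
}
```

For instance, `selector_matches "tier=cache environment=qa" "tier:In:cache,backend" "environment:NotIn:dev,prod"` succeeds, mirroring the matchExpressions example.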

Container runtime: importing and exporting images

Prepare an image

[root@k8s-master home]# docker pull alpine:3.15

3.15: Pulling from library/alpine

d078792c4f91: Pull complete

Digest: sha256:19b4bcc4f60e99dd5ebdca0cbce22c503bbcff197549d7e19dab4f22254dc864

Status: Downloaded newer image for alpine:3.15

docker.io/library/alpine:3.15

[root@k8s-master home]# docker save alpine:3.15 > alpine-3.15.tar

[root@k8s-master home]# ls -lh

total 166M

-rw-r--r--. 1 root root 5.7M May 13 19:44 alpine-3.15.tar

-rwxrwxrwx. 1 root root 35K May 8 00:18 install.sh

-rw-rw-rw-. 1 root root 160M May 7 20:45 k3s-airgap-images-amd64.tar.gz

-rw-r--r--. 1 root root 211 May 13 18:37 label-pod.yaml

drwx------. 15 master master 4.0K May 13 02:04 master

-rw-r--r--. 1 root root 143 May 13 18:22 my-pod.yaml

-rw-r--r--. 1 root root 217 May 13 18:53 my-service.yaml

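Import the image

-n k8s.io: import into the k8s.io namespace, the containerd namespace that Kubernetes uses, so that crictl and the kubelet can see the image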

[root@k8s-master home]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/library/nginx 1.22 0f8498f13f3ad 57MB

docker.io/library/nginx 1.23 a7be6198544f0 57MB

docker.io/rancher/klipper-helm v0.7.3-build20220613 38b3b9ad736af 239MB

docker.io/rancher/klipper-lb v0.3.5 dbd43b6716a08 8.51MB

docker.io/rancher/local-path-provisioner v0.0.21 fb9b574e03c34 35.3MB

docker.io/rancher/mirrored-coredns-coredns 1.9.1 99376d8f35e0a 49.7MB

docker.io/rancher/mirrored-library-busybox 1.34.1 c98db043bed91 1.47MB

docker.io/rancher/mirrored-library-traefik 2.6.2 72463d8000a35 103MB

docker.io/rancher/mirrored-metrics-server v0.5.2 f73640fb50619 65.7MB

docker.io/rancher/mirrored-pause 3.6 6270bb605e12e 686kB

[root@k8s-master home]# ctr -n k8s.io images import alpine-3.15.tar --platform linux/amd64

unpacking docker.io/library/alpine:3.15 (sha256:f85c40d9246ab4ff746abe8314c8c22d9720241bcf2244cabca3f66590a5fdd0)...done

[root@k8s-master home]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/library/alpine 3.15 32b91e3161c8f 5.88MB

docker.io/library/nginx 1.22 0f8498f13f3ad 57MB

docker.io/library/nginx 1.23 a7be6198544f0 57MB

docker.io/rancher/klipper-helm v0.7.3-build20220613 38b3b9ad736af 239MB

docker.io/rancher/klipper-lb v0.3.5 dbd43b6716a08 8.51MB

docker.io/rancher/local-path-provisioner v0.0.21 fb9b574e03c34 35.3MB

docker.io/rancher/mirrored-coredns-coredns 1.9.1 99376d8f35e0a 49.7MB

docker.io/rancher/mirrored-library-busybox 1.34.1 c98db043bed91 1.47MB

docker.io/rancher/mirrored-library-traefik 2.6.2 72463d8000a35 103MB

docker.io/rancher/mirrored-metrics-server v0.5.2 f73640fb50619 65.7MB

docker.io/rancher/mirrored-pause 3.6 6270bb605e12e 686kB

Export the image, then copy it to a worker node and import it there

[root@k8s-master home]# ctr -n k8s.io images export alpine.tar docker.io/library/alpine:3.15 --platform linux/amd64

[root@k8s-master home]# ls -lh

total 171M

-rw-r--r--. 1 root root 5.7M May 13 19:44 alpine-3.15.tar

-rw-r--r--. 1 root root 5.7M May 13 20:01 alpine.tar

-rwxrwxrwx. 1 root root 35K May 8 00:18 install.sh

-rw-rw-rw-. 1 root root 160M May 7 20:45 k3s-airgap-images-amd64.tar.gz

-rw-r--r--. 1 root root 211 May 13 18:37 label-pod.yaml

drwx------. 15 master master 4.0K May 13 02:04 master

-rw-r--r--. 1 root root 143 May 13 18:22 my-pod.yaml

-rw-r--r--. 1 root root 217 May 13 18:53 my-service.yaml

[root@k8s-master home]# scp alpine.tar root@192.168.31.180:~

root@192.168.31.180's password:

alpine.tar

[root@k8s-work1 ~]# ls -lh

total 5.7M

-rw-r--r--. 1 root root 5.7M May 13 20:28 alpine.tar

-rw-------. 1 root root 2.8K May 8 00:08 anaconda-ks.cfg

-rw-------. 1 root root 2.1K May 8 00:08 original-ks.cfg

[root@k8s-work1 ~]# ctr -n k8s.io images import alpine.tar --platform linux/amd64

unpacking docker.io/library/alpine:3.15 (sha256:f85c40d9246ab4ff746abe8314c8c22d9720241bcf2244cabca3f66590a5fdd0)...done

[root@k8s-work1 ~]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/library/alpine 3.15 32b91e3161c8f 5.88MB

docker.io/library/nginx 1.22 0f8498f13f3ad 57MB

docker.io/library/nginx 1.23 a7be6198544f0 57MB

docker.io/rancher/klipper-helm v0.7.3-build20220613 38b3b9ad736af 239MB

docker.io/rancher/klipper-lb v0.3.5 dbd43b6716a08 8.51MB

docker.io/rancher/local-path-provisioner v0.0.21 fb9b574e03c34 35.3MB

docker.io/rancher/mirrored-coredns-coredns 1.9.1 99376d8f35e0a 49.7MB

docker.io/rancher/mirrored-library-busybox 1.34.1 c98db043bed91 1.47MB

docker.io/rancher/mirrored-library-traefik 2.6.2 72463d8000a35 103MB

docker.io/rancher/mirrored-metrics-server v0.5.2 f73640fb50619 65.7MB

docker.io/rancher/mirrored-pause 3.6 6270bb605e12e 686kB
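The export, copy, and import steps above must be repeated for every worker. Here is a sketch that loops over the workers. It assumes root SSH access to each node and uses the worker IPs from this article. `distribute_image` and `run` are hypothetical helpers, and setting DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# run COMMAND [ARGS...]: execute the command, or only print it when DRY_RUN=1
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# distribute_image ARCHIVE NODE...
# Copies ARCHIVE to root's home directory on each NODE, then imports it into
# containerd's k8s.io namespace on that node.
distribute_image() {
  local archive=$1
  shift
  local file node
  file=$(basename "$archive")
  for node in "$@"; do
    # An empty remote path ("host:") means the remote user's home directory
    run scp "$archive" "root@${node}:" || return 1
    run ssh "root@${node}" ctr -n k8s.io images import "$file" --platform linux/amd64 || return 1
  done
}
```

Usage: `distribute_image alpine.tar 192.168.31.180 192.168.31.146`, then run `crictl images` on each worker to confirm the import.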

金丝雀发布

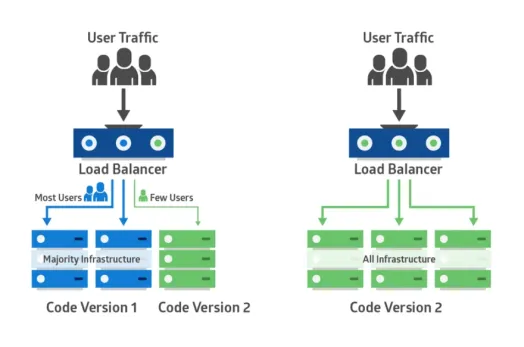

**金丝雀部署(canary deployment)**也被称为灰度发布。

早期,工人下矿井之前会放入一只金丝雀检测井下是否存在有毒气体。

采用金丝雀部署,你可以在生产环境的基础设施中小范围的部署新的应用代码。

一旦应用签署发布,只有少数用户被路由到它,最大限度的降低影响。

如果没有错误发生,则将新版本逐渐推广到整个基础设施。

部署过程

部署第一个版本

发布v1版本的应用,镜像使用nginx:1.22,数量为 3。

deploy-v1.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: dev
--- # separate multiple objects with ---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-v1
  namespace: dev
  labels:
    app: nginx-deployment-v1
spec:
  replicas: 3
  selector:
    matchLabels: # must match template.metadata.labels
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.22
          ports:
            - containerPort: 80
--- # separate multiple objects with ---
apiVersion: v1
kind: Service
metadata:
  name: canary-demo
  namespace: dev
spec:
  type: NodePort
  selector: # must match the selector in the Deployment
    app: nginx
  ports:
    # By default and for convenience, the `targetPort` is set to the same value as the `port` field.
    - port: 80
      targetPort: 80
      # Optional field
      # By default and for convenience, the Kubernetes control plane will allocate a port from a range (default: 30000-32767)
      nodePort: 30008

Apply

[root@k8s-master canary-demo]# kubectl apply -f deploy-v1.yaml

namespace/dev created

deployment.apps/nginx-deployment-v1 created

service/canary-demo created

[root@k8s-master canary-demo]# kubectl get all -n=dev

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-v1-645549fcf7-2x5xw 1/1 Running 0 130m

pod/nginx-deployment-v1-645549fcf7-cfwfk 1/1 Running 0 130m

pod/nginx-deployment-v1-645549fcf7-wph5c 1/1 Running 0 130m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/canary-demo NodePort 10.43.129.224 <none> 80:30008/TCP 130m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deployment-v1 3/3 3 3 130m

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deployment-v1-645549fcf7 3 3 3 130m

[root@k8s-master canary-demo]# curl 10.43.129.224:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k8s-master canary-demo]# kubectl describe service canary-demo -n=dev

Name: canary-demo

Namespace: dev

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.43.129.224

IPs: 10.43.129.224

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 30008/TCP

Endpoints: 10.42.1.20:80,10.42.1.21:80,10.42.1.22:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

Create the canary Deployment

Release the new version of the application, using the docker/getting-started image with 1 replica (deploy-canary.yaml):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-canary
  namespace: dev
  labels:
    app: nginx-deployment-canary
spec:
  replicas: 1
  selector:
    matchLabels: # must match template.metadata.labels
      app: nginx
  template:
    metadata:
      labels:
        app: nginx # same label as v1, so these Pods are added to the Service automatically
        track: canary
    spec:
      containers:
        - name: new-nginx
          image: docker/getting-started
          ports:
            - containerPort: 80

Apply

[root@k8s-master canary-demo]# kubectl apply -f deploy-canary.yaml

deployment.apps/nginx-deployment-canary created

[root@k8s-master canary-demo]# kubectl get all -n=dev

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-v1-645549fcf7-2x5xw 1/1 Running 0 144m

pod/nginx-deployment-v1-645549fcf7-cfwfk 1/1 Running 0 144m

pod/nginx-deployment-v1-645549fcf7-wph5c 1/1 Running 0 144m

pod/nginx-deployment-canary-74577469b5-fxdkz 1/1 Running 0 58s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/canary-demo NodePort 10.43.129.224 <none> 80:30008/TCP 144m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deployment-v1 3/3 3 3 144m

deployment.apps/nginx-deployment-canary 1/1 1 1 58s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deployment-v1-645549fcf7 3 3 3 144m

replicaset.apps/nginx-deployment-canary-74577469b5 1 1 1 58s
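The canary-demo Service selects every Pod labeled app=nginx, so traffic splits roughly in proportion to Pod counts. One canary Pod next to 3 v1 Pods receives about a quarter of the requests. Scaling the canary to 3 raises that to about half. The arithmetic can be written as a small hypothetical helper that returns integer percentages, rounded down:

```shell
#!/usr/bin/env bash
# canary_share CANARY_PODS STABLE_PODS
# Prints the approximate percentage of requests that reach the canary Pods,
# assuming the Service spreads connections evenly across all matching Pods.
canary_share() {
  local canary=$1 stable=$2
  local total=$(( canary + stable ))
  if (( total == 0 )); then
    echo 0
    return 1
  fi
  echo $(( canary * 100 / total ))
}
```

`canary_share 1 3` prints 25 and `canary_share 3 3` prints 50. Once the v1 Deployment is scaled to 0, `canary_share 3 0` prints 100.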

Adjust the ratio

[root@k8s-master canary-demo]# kubectl scale deploy nginx-deployment-canary --replicas=3 -n=dev

deployment.apps/nginx-deployment-canary scaled

[root@k8s-master canary-demo]# kubectl get all -n=dev

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-v1-645549fcf7-2x5xw 1/1 Running 0 152m

pod/nginx-deployment-v1-645549fcf7-cfwfk 1/1 Running 0 152m

pod/nginx-deployment-v1-645549fcf7-wph5c 1/1 Running 0 152m

pod/nginx-deployment-canary-74577469b5-fxdkz 1/1 Running 0 8m36s

pod/nginx-deployment-canary-74577469b5-m65s2 1/1 Running 0 20s

pod/nginx-deployment-canary-74577469b5-lkzql 1/1 Running 0 20s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/canary-demo NodePort 10.43.129.224 <none> 80:30008/TCP 152m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deployment-v1 3/3 3 3 152m

deployment.apps/nginx-deployment-canary 3/3 3 3 8m36s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deployment-v1-645549fcf7 3 3 3 152m

replicaset.apps/nginx-deployment-canary-74577469b5 3 3 3 8m36s

[root@k8s-master canary-demo]#

Retire the old version

[root@k8s-master canary-demo]# kubectl scale deploy nginx-deployment-v1 --replicas=0 -n=dev

deployment.apps/nginx-deployment-v1 scaled

[root@k8s-master canary-demo]# kubectl get all -n=dev

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-canary-74577469b5-fxdkz 1/1 Running 0 15m

pod/nginx-deployment-canary-74577469b5-m65s2 1/1 Running 0 7m32s

pod/nginx-deployment-canary-74577469b5-lkzql 1/1 Running 0 7m32s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/canary-demo NodePort 10.43.129.224 <none> 80:30008/TCP 159m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deployment-canary 3/3 3 3 15m

deployment.apps/nginx-deployment-v1 0/0 0 0 159m

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deployment-canary-74577469b5 3 3 3 15m

replicaset.apps/nginx-deployment-v1-645549fcf7 0 0 0 159m

Clean up (this deletes the workloads and Services in dev; the namespace itself remains)

kubectl delete all --all -n=dev

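Please credit the source when reposting. You are welcome to check the sources cited in this article and to point out anything that is wrong or unclear, either in the comments below or by email to jungle8884@163.com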